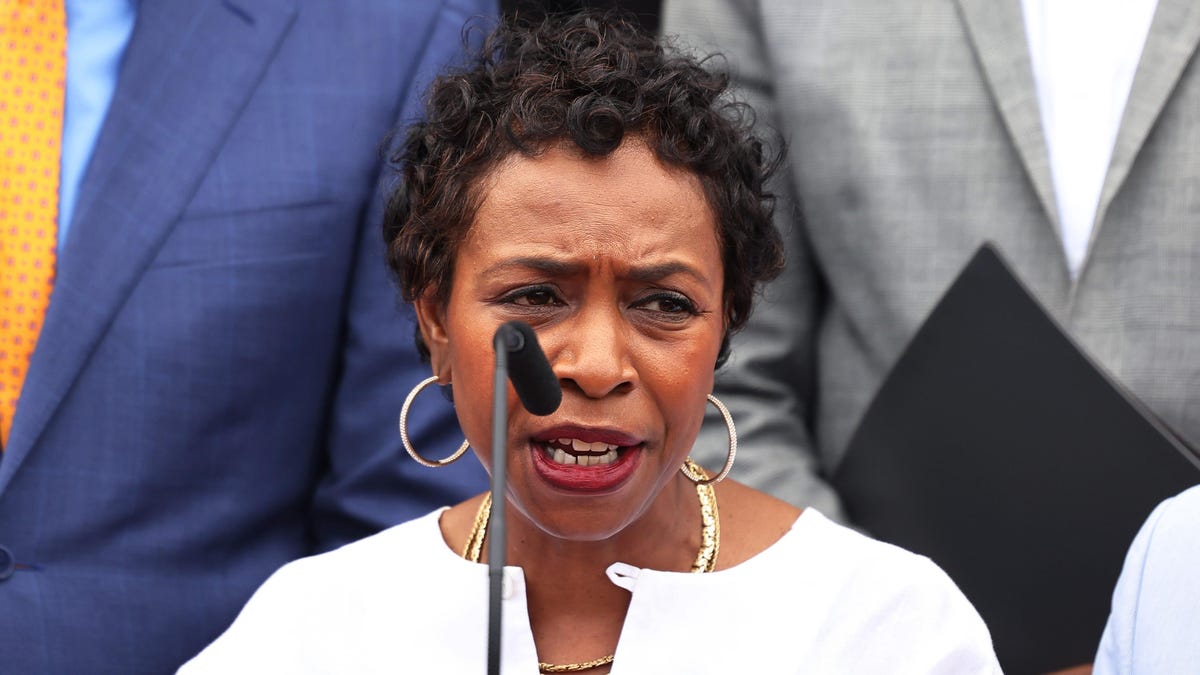

Rep. Yvette Clarke wasn’t exactly surprised when ex-President Donald Trump used an AI voice cloning tool to make Hitler, Elon Musk, and the Devil himself join a Twitter Space to troll Florida governor Ron DeSantis earlier this year. The former president wasn’t fooling anyone with the doctored screenshot, but Clarke worries similar political deepfakes will be weaponized to “create general mayhem” in what’s already shaping up to be a maddening 2024 election season. Without proper disclosures, Clarke, who has spent years warning of the danger of unchecked AI systems, says a skilled agent of chaos could even cause voters to stay home on election day, potentially influencing an election’s outcome.

“There are other folks in different laboratories, tech laboratories if you will, creating the ability to destroy, deceive, disguise, and create general mayhem. That’s some people’s 24-hour job. Congress hasn’t been able to keep up with a regulatory framework to protect the American people from this deception,” Clarke said in an interview with Gizmodo.

The New York Democrat believes her recently introduced REAL Political Ads Act could solve part of that problem. The relatively straightforward legislation would amend the Federal Election Campaign Act of 1971 to add language requiring political ads to include a disclosure if they use any AI-generated videos or images. Those updates, Clarke said, are critical to ensure campaign finance laws keep pace with AI and prevent further erosion of trust in government.

“My idea is very simple. It doesn’t brush up on First Amendment rights. It’s simply to disclose, where political ads are concerned, that the advertisement was generated by artificial intelligence. Now, what happens after that remains to be seen. I’m not here to stifle innovation or creativity, or one’s First Amendment rights,” she said. She added that she is “not necessarily convinced” that Congress will have a serious discussion of the ramifications of AI, but she is hopeful.

Since Clarke introduced the legislation in early May, Trump, DeSantis, and even the Republican National Committee have all released ads and other political material fueled by generative AI. Though most of the examples so far have come from one end of the political spectrum, Clarke says the issue affects everyone because “deepfakes don’t have a party affiliation.”

Others are taking notice. On the state level, legislators in more than half a dozen states have proposed legislation targeting deepfakes used for everything from political campaigns to child sexual abuse material. On the federal level, the White House recently met with leaders from seven AI companies and secured voluntary agreements from them on external testing and watermarks. Clarke applauded the efforts as a “strong first step” in ensuring safeguards for society.

None of this is necessarily news to Clarke though. The congresswoman was one of the first lawmakers to propose legislation requiring creators of AI-generated material to include watermarks. That was back in 2019, long before models like OpenAI’s ChatGPT and Google’s Bard became household names. Four years later, she is hoping her colleagues have taken note and will seize on the opportunity to get ahead of deepfakes and AI-generated content before it’s too late.

“Imagine if we wait for another election cycle. By then there will be some other iteration. It will be AI 2.0 or 2.5 and we’ll be catching up from there,” she said.

The following interview has been edited for length and clarity.

Deepfakes have been circulating in politics and the public consciousness for some time. Why did you decide this was the right time to introduce the REAL Political Ads Act?

Innovation has taken us to a whole new level with artificial intelligence and its ability to mimic, to fabricate scenarios, visually and audibly, that pose a real threat to the American people. I really knew after seeing the RNC [Republican National Committee’s] depiction of Joe Biden that it was out there, that the weaponization was here. In that case, they put a small disclosure on their video, but I know that there will be others who won’t be as conscientious. There will be those who intentionally throw up fabricated content to disrupt, confuse, and create general mayhem during the political season. We kinda got a sense of that during the era of Cambridge Analytica and the 2016 elections, so this is the next-gen, if you will.

You’ve been at the forefront of proposed deepfake legislation for years now, often ahead of most of the other members of Congress. What do you think of the sudden explosion of interest in generative AI and new evolving deepfake capabilities?

It was inevitable. While I’m here legislating, there are other folks in different laboratories, tech laboratories if you will, creating the ability to destroy, deceive, disguise, and create general mayhem. That’s some people’s 24-hour job. So I’m not surprised, but Congress hasn’t been able to keep up with a regulatory framework to protect the American people from this deception. The average person isn’t thinking about AI and isn’t necessarily going to question something that jumps into their social media feeds or is sent in an email by a relative.

I really feel like Congress has got to step up and at least put a floor to conduct on the internet. Otherwise, we are really dealing with the Wild Wild West. My idea is very simple. It doesn’t brush up on First Amendment rights. It’s simply to disclose, where political ads are concerned, that the advertisement was generated by artificial intelligence. Now, what happens after that remains to be seen. I’m not here to stifle innovation or creativity, or one’s First Amendment rights.

What’s the nightmare scenario you see if deepfakes are allowed to influence politics unchecked?

We don’t want to be caught flat-footed when an adversary outside of our particular political dynamic, or an international adversary, decides to detonate a deepfake ad and inject it into our political discourse to create hysteria among the American people. Imagine receiving a video on election day that shows flooding in a particular area of the country and says today’s election has been canceled, so people don’t vote. It’s not farfetched. I think there are a whole host of nefarious characters out there, both domestically and internationally, that are looking forward to disrupting this election cycle. This will be the first election cycle where generative AI content is out there to be consumed by the American people.

We’re slowly seeing an erosion of trust and integrity in the democratic process, and we’ve got to do everything we can to undergird and strengthen that integrity. The last few election cycles have thrown the American people for a loop. And while some people are determined to hold onto this democracy with all their might, there are a lot of folks who are becoming disaffected as well.

Deepfake technology has gotten much better in recent years but there also seems to be a stronger appetite for conspiratorial thinking as well. Are these two elements potentially feeding off of each other?

We’ve reached a new high, or a new low, depending on how you look at it, in propagandizing our civil society. The problem with deepfakes is that you can create whatever scenario you want to validate whatever theory you have. There doesn’t have to be a factual or scientific basis for it. So those who want to believe that there’s a child trafficking ring in a pizzeria in Washington, D.C., can actually depict that through a deepfake scenario.

I think the abrupt adoption of virtual lives as a result of the pandemic opened us up to much more intrusion into our lives than ever before. Some people dibbled and dabbled with search engines and with social media platforms before but it wasn’t really what they did all day. Now, you have folk who never go into an office. Technology firms know more about you now.

What type of political appetite is there amongst your colleagues to move forward with AI regulation generally?

They’re far more conscious of it. I think having the creators of AI systems come out with concerns about how their technology can be used not only for good but also for nefarious interests has also percolated amongst my colleagues. I’m getting lots of feedback from colleagues, primarily younger colleagues and colleagues who are Democrats. That’s not to say there aren’t those on the other side of the aisle who have taken note. I just find that the agendas for what we are pursuing in terms of policy are divergent.

Divergent in what ways?

Well, right now my colleagues on the Republican side are doing culture wars and we’re doing policy. They [the Republican Party] dominate the agenda. We should have a law of the land around data privacy by now. That is the nugget, the algorithm that then creates the AI. It’s all built upon the individual data that we are constantly pumping into the technology. Our behavior is being tracked and based on that products are being developed and our own information is used against us.

This year, both the Trump and DeSantis presidential campaigns have already published deepfake political material, some more obviously works of parody than others. What do those examples tell you about the moment?

We’re here. At the end of the day, my legislation didn’t come out of thin air; we knew what the capabilities of the technology were. Now we’re in the age of AI, where the amount of mega data that goes into systems is at your fingertips. I think about all of the emails and social media links that I receive in a day. People will be inundated with political content, and I’m sure a certain portion of it will be AI-generated.

How confident are you that Congress can make meaningful strides in limiting deepfakes before it’s too late?

Well, I’m going to do everything I can to continue to shine a light on and address this issue through legislation. My proposed legislation is a simple solution and it needs to be bipartisan because deepfakes don’t have a party affiliation. It can impact anyone at any time, anywhere. I’m not necessarily convinced that we’ll have an opportunity to take it up in this Congress. My hope is that in the next Congress, we’ll have a suite of policies that really addresses our online health.

I’m open to any point in time that it gets done, but just imagine if we wait for another election cycle. By then there will be some other iteration. It will be AI 2.0 or 2.5 and we’ll be catching up from there. And it’s not relegated to one political party or another. As a matter of fact, it’s not even relegated to just Americans. Any source from anywhere in the world can be disruptive. The North Koreans can get involved, or the Iranians, or the Chinese, or the Russians. We’re all connected.