The era of bad robot music is upon us. Adobe is working on a new AI tool that will let anyone be a music producer—no instrument or editing experience required.

The company unveiled “Project Music GenAI Control”—a very long name—this week. The tool lets users create and edit music simply by typing text prompts into a generative AI model. These prompts can include descriptions like “powerful rock,” “happy dance,” or “sad jazz,” Adobe explained.

Project Music GenAI Control then generates an initial tune based on the user’s prompt, which users can refine further with additional text. Adobe said users can, for instance, adjust the generated music’s intensity, extend the length of the clip, or turn it into a repeatable loop, among other things.

The target audience for this new tool includes podcasters, broadcasters, and “anyone else who needs audio that’s just the right mood, tone, and length,” said Nicholas Bryan, a senior Adobe research scientist and one of the creators of the technology.

“One of the exciting things about these new tools is that they aren’t just about generating audio—they’re taking it to the level of Photoshop by giving creatives the same kind of deep control to shape, tweak, and edit their audio,” Bryan stated in an Adobe blog post. “It’s a kind of pixel-level control for music.”

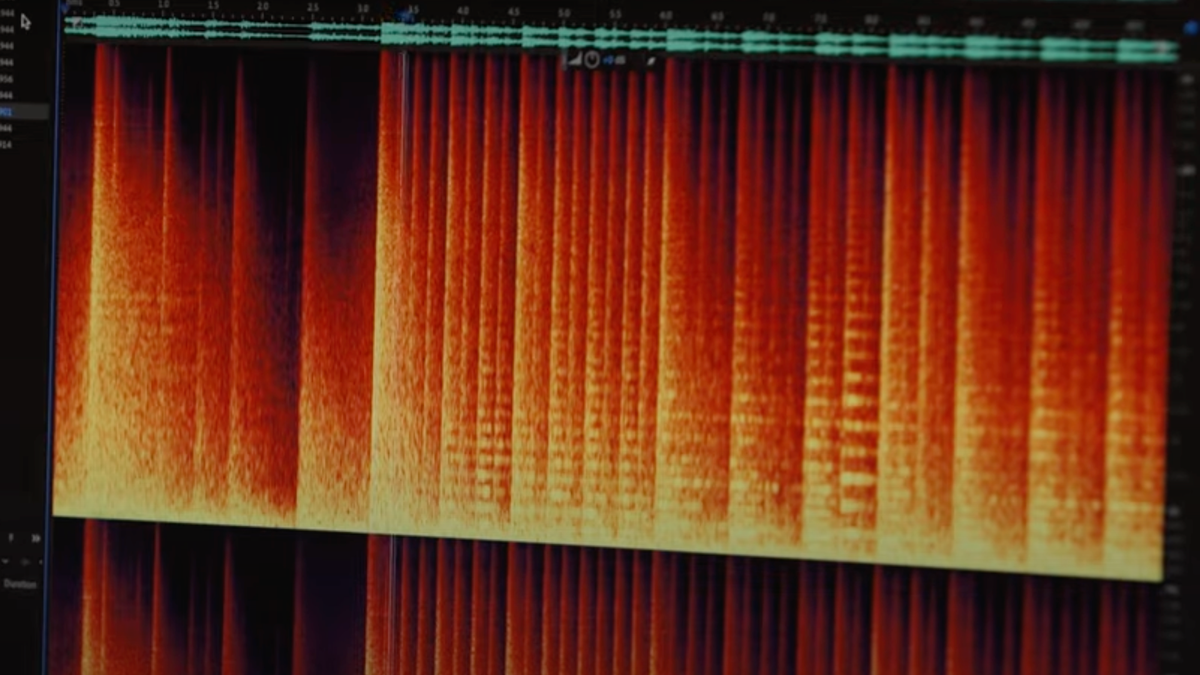

Adobe uploaded a video that showed how Project Music GenAI Control worked, and it was scary how easy it was for the tool to create music. It also appeared to work very fast. While the music it generated isn’t going to win any Grammys, it’s something I can definitely imagine hearing in the background of YouTube videos, TikToks, or Twitch streams.

That’s not exactly a good thing. AI has reverberated through professions like writing and acting, forcing workers to take a stand to keep their livelihoods from being stolen. Comments on the company’s YouTube video echoed these concerns, skewering Adobe for creating “music written by robots, for robots” and “good corporate cringe.”

“Thanks Adobe for trying to find even more ways for corporations to screw creatives out of jobs. Also, from what artists did you steal the material that you used to train your AI on?” one user wrote.

When asked for comment by Gizmodo, Adobe did not disclose details about the music used to train the AI model behind Project Music GenAI Control. However, it did point out that for Firefly, its family of AI image generators, it had trained its models only on openly licensed content and public domain content whose copyright had expired.

“Project Music GenAI Control is a very early look at technology developed by Adobe Research and while we’re not disclosing the details of the model quite yet, what we can share is: Adobe has always taken a proactive approach in ensuring we are innovating responsibly,” Anais Gragueb, an Adobe spokesperson, told Gizmodo in an email.

Music is art and inherently human. As such, we must be careful when it comes to new tools like Adobe’s—or else risk a future where music sounds as empty as the machines generating it.

Update 3/1/2024, 5:56 p.m. ET: This post has been updated with additional comment from Adobe.