Benj Edwards / Stable Diffusion

On Thursday, OpenAI announced a new beta feature for ChatGPT that lets users provide custom instructions the chatbot will consider with every submission. The goal is to spare users from repeating common instructions between chat sessions.

The feature is currently available in beta for ChatGPT Plus subscription members, but OpenAI says it will extend availability to all users over the coming weeks. As of this writing, the feature is not yet available in the UK and EU.

Custom Instructions works by letting users set individual preferences or requirements that the AI model then considers when generating responses. Instead of starting each conversation from scratch, ChatGPT can now be told to remember specific user preferences across multiple interactions.

In its introductory blog post, OpenAI gave examples of how someone might use the new feature: “For example, a teacher crafting a lesson plan no longer has to repeat that they’re teaching 3rd grade science. A developer preferring efficient code in a language that’s not Python – they can say it once, and it’s understood. Grocery shopping for a big family becomes easier, with the model accounting for 6 servings in the grocery list.”

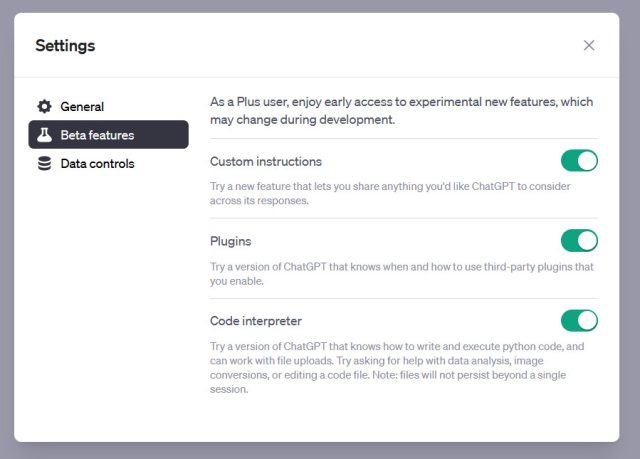

Ars Technica

If you’d like to try it out, you’ll need to do some digging in Settings. Web users of ChatGPT should click on their name in the lower-left corner, navigate to “Settings,” then “Beta Features,” and click the switch beside “Custom Instructions.” A new “Custom Instructions” option will appear in the menu going forward. For those using iOS, tap “Settings,” then “New Features,” and toggle “Custom Instructions” to the on position.

If you don’t see the option in your ChatGPT settings, the feature likely hasn’t reached your account or region yet.

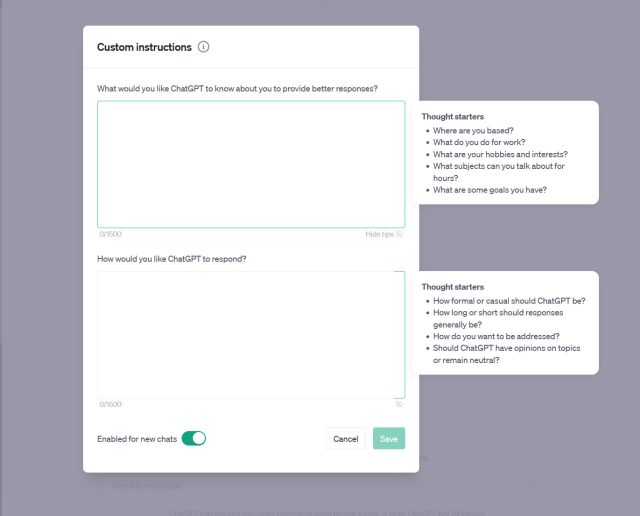

After activating the Custom Instructions feature and clicking its option in Settings, you’ll see two text input fields: One asks what ChatGPT should know about you, and the other asks, “How would you like ChatGPT to respond?”

Ars Technica

OpenAI says the first input field is designed to collect context about the user that can help the model provide better responses. It suggests entering details such as the user’s profession, preferences, or anything else the model might find useful. For instance, “I work on science education programs for third-grade students.”

The second field gathers specifics on how users would like the AI model to respond, letting them guide the style or format of its output. For example, users could instruct ChatGPT to present information as a table that outlines the pros and cons of each option.

In testing the feature, Ars found that Custom Instructions flavors the results much as providing the same information in a prompt would. In that sense, it seems to work as a prompt extension, although we are not sure whether its text (up to 1,500 characters in each field) counts against the overall context window. The context window, currently limited to 4,096 tokens (word fragments), determines the conversation’s short-term memory, so to speak. We’ve reached out to OpenAI for clarification.
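To make the “prompt extension” idea concrete, here is a minimal sketch, not OpenAI’s implementation. It assumes, without confirmation from OpenAI, that both fields are prepended to each conversation as a single system-style message and that they count against the 4,096-token window. The class and function names (`CustomInstructions`, `build_messages`, `estimated_tokens`) are hypothetical. The role/content message shape mirrors OpenAI’s public chat API, but how ChatGPT actually injects custom instructions is not public. The token estimate uses the rough rule of thumb of about four characters per token for English text rather than a real tokenizer.

```python
# Hypothetical sketch of Custom Instructions as a "prompt extension".
# Assumptions (NOT confirmed by OpenAI): both fields are prepended to every
# conversation as one system-style message, and they count against the
# 4,096-token context window. CHARS_PER_TOKEN is a rough heuristic only.
from __future__ import annotations

from dataclasses import dataclass

FIELD_LIMIT_CHARS = 1_500      # per-field limit reported in the article
CONTEXT_WINDOW_TOKENS = 4_096  # context window cited in the article
CHARS_PER_TOKEN = 4            # rule of thumb for English text (assumption)


@dataclass
class CustomInstructions:
    about_user: str      # first field: what ChatGPT should know about you
    response_style: str  # second field: how ChatGPT should respond

    def validate(self) -> None:
        """Reject any field longer than the per-field character limit."""
        fields = (("about_user", self.about_user),
                  ("response_style", self.response_style))
        for name, text in fields:
            if len(text) > FIELD_LIMIT_CHARS:
                raise ValueError(f"{name} exceeds {FIELD_LIMIT_CHARS} characters")


def build_messages(ci: CustomInstructions, user_prompt: str) -> list[dict[str, str]]:
    """Prepend the instructions to a user turn (hypothetical message format)."""
    ci.validate()
    system_text = (f"About the user: {ci.about_user}\n"
                   f"Response style: {ci.response_style}")
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_prompt},
    ]


def estimated_tokens(text: str) -> int:
    """Rough token count; ceiling division so non-empty text costs >= 1 token."""
    return -(-len(text) // CHARS_PER_TOKEN)


if __name__ == "__main__":
    ci = CustomInstructions(
        about_user="I work on science education programs for third-grade students.",
        response_style="Present options in a table with pros and cons.",
    )
    messages = build_messages(ci, "Suggest a lesson on plant life cycles.")
    print(messages[0]["content"])

    # Worst case under these assumptions: both fields filled to the limit.
    worst_chars = 2 * FIELD_LIMIT_CHARS
    worst_tokens = estimated_tokens("x" * worst_chars)
    share = worst_tokens / CONTEXT_WINDOW_TOKENS
    print(f"{worst_chars} chars ~ {worst_tokens} tokens ~ {share:.0%} of the window")
```

Under those assumptions, two full fields (3,000 characters) would come to roughly 750 tokens, or about 18 percent of a 4,096-token window, which is why the question of whether they count matters for long conversations.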

Custom Instructions also works with plugins, says OpenAI. For example, “if you specify the city you live in within your instructions and use a plugin that helps you make restaurant reservations, the model might include your city when it calls the plugin.” But the company also warns in the ChatGPT interface that custom instructions, like prompts, are shared with plugin providers.

The company says that while custom instructions will be applied to all future conversations once enabled, the new feature may not work perfectly during the beta test. ChatGPT might overlook instructions or apply them in contexts where they were not intended. For now, OpenAI hopes to collect feedback on the feature from users during the beta period.